This document contains only my personal opinions and calls of judgement, and where any comment is made as to the quality of anybody's work, the comment is an opinion, in my judgement.


The UNIX way of thinking has been largely lost in a cacophony of misunderstandings and unreflecting shortcuts, but it can still be relevant when explained, and recently it occurred to me that part of it can be explained with a simple example: a command line to suspend (SIGSTOP) the Firefox web browser overnight (because it leaks memory and website scripts can loop), in several variants:

killall -STOP firefox
kill -STOP $(pidof firefox)
pidof firefox | xargs kill -STOP

It is possible to argue that pidof should be replaced by a more elementary pipeline like:

ps ... | sed 's/^ \+//; s/ \+/\t/g' \
  | egrep ':.. [^ ]*firefox' | cut -f 1

But I prefer something like pidof, as it is a simple single-purpose command. However, as the pipeline above suggests, pidof as it stands is rather not-UNIXy, because it embeds a version of ps that is rather limited in functionality, when it should instead be a filter for ps output.

But recent versions of ps already do some filtering, so pidof firefox can be replaced by ps -o pid= -C firefox (or some variant). Of course a ps that contains its own filtering logic is itself not-UNIXy, but rather less so than pidof, so as a compromise the final version is:

ps -o pid= -C firefox | xargs kill -STOP
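To make concrete the suggestion that pidof should be a filter for ps output rather than embedding its own limited ps, here is a minimal sketch in Rust of such a filter. The program name pidfilter and the choice of ps -e -o pid= -o comm= as its input format are assumptions of mine, not existing tools or conventions: it reads PID and command name pairs on standard input and prints the PIDs whose command name matches exactly.

```rust
use std::env;
use std::io::{self, BufRead};

/// Given lines in the format produced by `ps -e -o pid= -o comm=`
/// (optional leading spaces, a PID, whitespace, then the command name),
/// return the PIDs whose command name is exactly `name`.
fn pids_matching<'a, I>(lines: I, name: &str) -> Vec<u32>
where
    I: IntoIterator<Item = &'a str>,
{
    lines
        .into_iter()
        .filter_map(|line| {
            // Split off the PID; the rest of the line is the command name,
            // which may itself contain spaces (e.g. "Web Content").
            let (pid, comm) = line.trim_start().split_once(char::is_whitespace)?;
            let pid: u32 = pid.parse().ok()?;
            (comm.trim() == name).then_some(pid)
        })
        .collect()
}

fn main() {
    // Hypothetical tool name: `pidfilter NAME`, reading ps output on stdin.
    let name = env::args().nth(1).expect("usage: pidfilter NAME < ps-output");
    let stdin = io::stdin();
    let lines: Vec<String> = stdin.lock().lines().map_while(Result::ok).collect();
    for pid in pids_matching(lines.iter().map(String::as_str), &name) {
        println!("{}", pid);
    }
}
```

It would be used as ps -e -o pid= -o comm= | pidfilter firefox | xargs kill -STOP, so that all the process selection logic stays in ps and the filter does only one thing. Note that the command name ps reports may be truncated (on Linux to 15 characters), which a real filter would have to allow for.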

Discussing the topic of economies of scale in computing infrastructure I was arguing that beyond a fairly low level of scale they become quite small, as the high prices of computing infrastructure-as-a-service from the big providers demonstrate.

The question is why that is so, and the answer is important in general: as a rule economies of scale as such don't exist, because scale by itself does not bring any greater efficiency. What brings higher efficiency is specialization, that is economies of inflexibility.

Consider making car body panels or door frames: you can make them manually or by using a huge press that stamps a steel sheet into the desired shape in one go. This enables production at scale. It can be argued that the press is also enabled by production at scale, because the cost of the gigantic press is only justified for long production runs. But this begs the question of why a high scale of production would justify the cost of the press: it would not if the press were no more efficient than manually hammering a sheet of metal into shape, because then a huge press would offer nothing that many workers, or several small presses, could not.

But a large press has better efficiency not because of the larger scale of production, but because it is highly specialized: given a mould for a car door it can only press steel sheets into that car door frame, unlike a worker or a smaller press, and it can take weeks to make a new mould and fit the press with it. In other words it is not the scale of the production, or even the size of the press in itself, that delivers the benefit, but the high specialization of the press, which allows the construction of a very efficient machine.

Note: a huge press is more efficient than a smaller one because only a huge pressure on a large surface can press a steel sheet into a car door frame in one go.

But high specialization usually results in high inflexibility, so a large production run justifies the cost of a very efficient but inflexible machine, whereas many smaller runs don't make that investment worthwhile, even if the more expensive specialized system would be more efficient in technical terms.
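The trade-off can be written as a simple break-even condition. As an illustration with symbols of my own choosing: let F be the fixed cost of the big press plus its mould, c_m the per-panel cost of manual work (or of small presses), and c_p < c_m the per-panel cost with the big press. For a run of N identical panels the press is worth buying only when

$$
F + N\,c_p < N\,c_m
\quad\Longleftrightarrow\quad
N > N^{*} = \frac{F}{c_m - c_p}
$$

Since a new mould (part of F) is needed for every different panel, each run has to clear its own threshold N*: the efficiency comes from specialization, and the length of each run only decides whether that specialization pays for itself.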

Note: that it is specialization that results in greater efficiency, rather than scale itself, was recognized by Adam Smith, who called it division of labour in his famous example of a pin factory. But assigning the various steps of manufacturing a pin to specialized workers is inflexible, and thus justified only by relatively large production runs of identical pins; and once every specialized worker is fully busy, there are no more economies of specialization to achieve: doubling the number of workers just doubles the output.

The same applies even more strongly to computing infrastructure, and its opposite too :-):

Shenzhen price applies to any medium-large customer).

economies of scale in their use, because the resulting systems are all pretty much the same: the computers (both hw and sw) in a data center with 1,000 systems are going to be pretty much the same as those in one with 10,000 systems or 100,000 systems, because the components they are built from are being manufactured in production runs of millions a year.

cloud computing for IaaS, specialization of the systems is particularly hard to achieve, as they must be as generic as possible to be compatible with any workload, so economies of scale don't apply very much to the systems. There are claims that economies of specialization are possible in the data center building itself, but beyond a fairly small scale electricity supply, cooling systems, and their maintenance cannot be specialized further.

This has been noted elsewhere: when LC_CTYPE specifies a UTF-8 character set, grep -i is very expensive, as in these cases:

$ cat $FILES | time sh -c 'LC_CTYPE=en_GB.UTF-8 grep -i aturana'
Command exited with non-zero status 1
14.94user 0.03system 0:15.00elapsed 99%CPU (0avgtext+0avgdata 2492maxresident)k
0inputs+0outputs (0major+210minor)pagefaults 0swaps
$ cat $FILES | time sh -c 'LC_CTYPE=C.UTF-8 grep -i aturana'
Command exited with non-zero status 1
14.96user 0.15system 0:15.12elapsed 99%CPU (0avgtext+0avgdata 2292maxresident)k
416inputs+0outputs (10major+205minor)pagefaults 0swaps

$ cat $FILES | time sh -c 'LC_CTYPE=en_GB grep -i aturana'
Command exited with non-zero status 1
0.63user 0.05system 0:00.70elapsed 98%CPU (0avgtext+0avgdata 2288maxresident)k
0inputs+0outputs (0major+200minor)pagefaults 0swaps
$ cat $FILES | time sh -c 'LC_CTYPE=C grep -i aturana'
Command exited with non-zero status 1
0.61user 0.04system 0:00.66elapsed 98%CPU (0avgtext+0avgdata 1912maxresident)k
0inputs+0outputs (0major+181minor)pagefaults 0swaps

$ cat $FILES | time sh -c 'tr A-Z a-z | grep aturana'
Command exited with non-zero status 1
0.33user 0.37system 0:00.38elapsed 184%CPU (0avgtext+0avgdata 2424maxresident)k
0inputs+0outputs (0major+280minor)pagefaults 0swaps

The time depends not on the language of the locale but on the character encoding. What is manifestly happening is that with UTF-8 grep lowercases using a conversion table that covers all the uppercase characters in the enormous Unicode code space, and that is quite expensive indeed; this is a bit unexpected, like the previous case where using the en_GB collating sequence resulted in a non-traditional sorting order.
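The semantic difference between the two kinds of case-insensitive matching can be shown with a small Rust sketch. It does not reproduce how grep is implemented; it only contrasts folding ASCII A-Z alone, like tr A-Z a-z, with full Unicode lowercasing, which is what a UTF-8 locale asks grep -i to honour. The function names are hypothetical.

```rust
/// Case-insensitive substring test folding only ASCII A-Z,
/// as when piping through `tr A-Z a-z` before a plain `grep`.
fn contains_ascii_ci(haystack: &str, needle: &str) -> bool {
    haystack.to_ascii_lowercase().contains(&needle.to_ascii_lowercase())
}

/// The same test with full Unicode lowercasing, which must consult
/// case mappings for the whole Unicode repertoire, not just 26 letters.
fn contains_unicode_ci(haystack: &str, needle: &str) -> bool {
    haystack.to_lowercase().contains(&needle.to_lowercase())
}

fn main() {
    let line = "Humberto MATURANA at the ÉCOLE";
    // Both agree on plain ASCII text: prints true, true.
    println!("{}", contains_ascii_ci(line, "aturana"));
    println!("{}", contains_unicode_ci(line, "aturana"));
    // Only Unicode folding maps 'É' to 'é': prints true, then false.
    println!("{}", contains_unicode_ci(line, "école"));
    println!("{}", contains_ascii_ci(line, "école"));
}
```

For text that is almost entirely ASCII the two give the same answers, which is why the cheaper ASCII-only folding is usually all that is needed.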

Given that I very rarely care about lowercasing other than as the traditional tr A-Z a-z equivalent, I have decided for now to set LC_CTYPE to C, as well as LC_COLLATE and LC_NUMERIC.

While duplicating some directories with rsync -i -axAXHO (where the A and X options request the duplication of ACLs and extended attributes) to a filetree of a type that does not support extended attributes, I was surprised to see that some files had extended attributes. It turns out that:

If you download and save a file with Chromium (even in incognito mode), it saves potentially sensitive metadata in a way that's completely unknown to almost all users, even highly technical ones:

user.xdg.referrer.url: ...
user.xdg.origin.url: ...

Which is entirely undocumented, and may even be useful. I also saw that the Baloo indexer for KDE may add the user.baloo.rating extended attribute, but that is at least documented.

Entertaining article about cloud and colocation hosting costs, which is briefly summarized as:

So how much does hosting cost these days? To answer that apparently trivial question, Dyachuk presented a detailed analysis made from a spreadsheet that compares the costs of "colocation" (running your own hardware in somebody else's data center) versus those of hosting in the cloud. For the latter, Dyachuk chose Amazon Web Services (AWS) as a standard, reminding the audience that "63% of Kubernetes deployments actually run off AWS". Dyachuk focused only on the cloud and colocation services, discarding the option of building your own data center as too complex and expensive. The question is whether it still makes sense to operate your own servers when, as Dyachuk explained, "CPU and memory have become a utility", a transition that Kubernetes is also helping push forward.

The very premise that building your own datacenter is too complex and expensive is, to say the least, contentious: for a small set of a few dozen servers it is usually very easy to find space for 1-2 racks, and for larger datacenters there are plenty of suitable places on industrial estates. The costs can be quite low, because contrary to myth the big hosting companies don't have significant economies of scale or scope. But the mention of Kubernetes is a powerful hint: the piece promotes Kubernetes, and in effect does so by assuming workloads for which Kubernetes works well, which are not necessarily many:

Kubernetes helps with security upgrades, in that it provides a self-healing mechanism to automatically re-provision failed services or rotate nodes when rebooting. This changes hardware designs; instead of building custom, application-specific machines, system administrators now deploy large, general-purpose servers that use virtualization technologies to host arbitrary applications in high-density clusters.

That applies only in the simple case where systems are essentially stateless and interchangeable, which is the case of most content delivery networks. But in that case using a large server and partitioning it into many smaller servers, each with essentially the same system and application, seems to me rather futile, because Kubernetes is in effect a system to manage a number of instances of the same live-CD type of system.

When presenting his calculations, Dyachuk explained that "pricing is complicated" and, indeed, his spreadsheet includes hundreds of parameters. However, after reviewing his numbers, I can say that the list is impressively exhaustive, covering server memory, disk, and bandwidth, but also backups, storage, staffing, and networking infrastructure.

Precise pricing is complicated, but the problem is much simplified by two realizations: usually a few aspects are going to dominate the pricing, and anyhow, because of the great, varied and changing anisotropy of many workloads and infrastructures, precise pricing of an infrastructure is futile, and rounding up by a factor of 2 is going to be an important design technique, to a point.

For servers, he picked a Supermicro chassis with 224 cores and 512GB of memory from the first result of a Google search. Once amortized over an aggressive three-year rotation plan, the $25,000 machine ends up costing about $8,300 yearly. To compare with Amazon, he picked the m4.10xlarge instance as a commonly used standard, which currently offers 40 cores, 160GB of RAM, and 4Gbps of dedicated storage bandwidth. At the time he did his estimates, the going rate for such a server was $2 per hour or $17,000 per year.

That assumed three-year lifetime is absurdly short for many organizations: they will amortize the systems over 3 years if tax law allows it, because it saves tax (if the organization is profitable), but they will usually keep them around for much longer. The cloud provider will do the same, but still charge $2 per hour for as long as it can.
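The arithmetic behind the quoted figures, and the effect of a longer service life, can be checked with a few lines of Rust. The $25,000 price, the three-year rotation and the $2 per hour rate come from the quote; the five- and seven-year lifetimes are hypothetical examples, and the calculation deliberately ignores power, space, networking and staff.

```rust
const HOURS_PER_YEAR: f64 = 24.0 * 365.0; // 8,760 hours, ignoring leap years

/// Straight-line yearly cost of a purchased server over its service life.
fn owned_yearly(price: f64, years: f64) -> f64 {
    price / years
}

/// Yearly cost of a cloud instance rented around the clock.
fn cloud_yearly(per_hour: f64) -> f64 {
    per_hour * HOURS_PER_YEAR
}

fn main() {
    let server_price = 25_000.0; // Supermicro chassis price from the quote
    let cloud_rate = 2.0; // m4.10xlarge dollars per hour from the quote
    println!("cloud: ${:.0}/year", cloud_yearly(cloud_rate));
    // 3 years is the quoted rotation; 5 and 7 are hypothetical lifetimes.
    for years in [3.0, 5.0, 7.0] {
        println!("owned over {} years: ${:.0}/year", years, owned_yearly(server_price, years));
    }
}
```

The quoted $17,000 is a rounding of $17,520; the point is that the rental stays at the same rate for as long as the instance runs, while the owned server's yearly cost keeps falling the longer it is kept.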

So, at first, the physical server looks like a much better deal: half the price and close to quadruple the capacity. But, of course, we also need to factor in networking, power usage, space rental, and staff costs.

And that is the big deal: for some business models the cost of electricity (both to power and to cool) is going to be bigger than the price of the systems. At the same time, within limits, the incremental cost will in some cases be much lower than the average cost and in others much bigger, because costs can be highly quantized (for example incremental costs can be very low while an existing datacenter is being filled up, and very high if a new datacenter has to be built). The advantage or disadvantage of cloud pricing is that incremental and average cost are always the same.
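A toy model makes the difference between quantized in-house costs and flat cloud pricing visible. All the numbers, and the structure itself (a fixed cost per datacenter of fixed capacity plus a per-server cost), are hypothetical simplifications of mine, chosen only to show how in-house the incremental cost can be far below or far above the average cost, while in the cloud the two coincide.

```rust
/// Hypothetical in-house cost model: servers are cheap to add until the
/// current datacenter is full, then a whole new one must be built.
struct InHouse {
    dc_capacity: u32, // servers per datacenter (assumed)
    dc_cost: f64,     // fixed cost of one datacenter (assumed)
    server_cost: f64, // cost per server, power and cooling included (assumed)
}

impl InHouse {
    fn total_cost(&self, servers: u32) -> f64 {
        // Ceiling division: number of datacenters needed for `servers`.
        let dcs = (servers + self.dc_capacity - 1) / self.dc_capacity;
        f64::from(dcs) * self.dc_cost + f64::from(servers) * self.server_cost
    }

    fn average_cost(&self, servers: u32) -> f64 {
        self.total_cost(servers) / f64::from(servers)
    }

    /// Cost of adding one more server to an existing `servers`.
    fn incremental_cost(&self, servers: u32) -> f64 {
        self.total_cost(servers + 1) - self.total_cost(servers)
    }
}

/// Cloud pricing: a flat price per server, so incremental equals average.
fn cloud_total_cost(servers: u32, price_per_server: f64) -> f64 {
    f64::from(servers) * price_per_server
}

fn main() {
    let dc = InHouse { dc_capacity: 1_000, dc_cost: 1_000_000.0, server_cost: 10_000.0 };
    for n in [500, 999, 1_000] {
        println!(
            "{} servers: average {:.0}, next one costs {:.0}",
            n,
            dc.average_cost(n),
            dc.incremental_cost(n)
        );
    }
    let cloud = |n| cloud_total_cost(n, 15_000.0);
    println!("cloud: next one costs {:.0}", cloud(1_001) - cloud(1_000));
}
```

With these made-up numbers the 1,000th server costs 10,000 while the 1,001st costs 1,010,000, against an average of 11,000; in the cloud every extra server costs the same.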

Which brings us to the sensitive question of staff costs; Dyachuk described those as "substantial". These costs are for the system and network administrators who are needed to buy, order, test, configure, and deploy everything. Evaluating those costs is subjective: for example, salaries will vary between different countries. He fixed the person yearly salary costs at $250,000 (counting overhead and an actual $150,000 salary) and accounted for three people on staff.

That $250,000 per person labour cost seems very high to me, and might apply only to San Francisco-based staff, even in the USA.

Dyachuk also observed that staff costs are the majority of the expenses in a colocation environment: "hardware is cheaper, but requires a lot more people". In the cloud, it's the opposite: most of the costs consist of computation, storage, and bandwidth.

The system and network administrators are needed whether using a cloud system or a colocated or in-house one, because the cloud vendors administer the virtualization platform, which is invisible to users, not the virtualized systems.

The difference is between spinning up or installing systems, so the additional labour costs of a colocated or in-house system are almost entirely in procurement and in the physical installation and maintenance of the network and systems infrastructure, and they are not large.

The software-defined configuration of a set of networks and systems is going to be much the same whether they are virtual or physical: lots and lots of program code.

That of course applies even more to the system and network administration of a large and complex cloud platform, and the related costs are going to be part of the price quoted to customers, whereas for colocated or in-house systems they need not exist, unless the organization decides to create its own large and complex private cloud infrastructure, which is a self-inflicted issue.

Staff also introduce a human factor of instability in the equation: in a small team, there can be a lot of variability in ability levels. This means there is more uncertainty in colocation cost estimates.

Only for very small infrastructures.

Once the extra costs described are factored in, colocation still would appear to be the cheaper option. But that doesn't take into account the question of capacity: a key feature of cloud providers is that they pool together large clusters of machines, which allow individual tenants to scale up their services quickly in response to demand spikes.

Here there are several cases: predictable and gentle long term changes of demand don't require the on-the-spot flexibility of large pools of cloud resources, they can be handled with trivial planning of hardware resources.

What cloud resources can handle within limits is unpredictable changes in resource usage, or predictable but quite large (but not large compared to the cloud ecosystem) ones. Unfortunately in the real world those are relatively rare cases except for small amounts of demand.

Note: variability of capacity and demand happens all the time in the case of shared infrastructures of all types, whether it is the electricity grid or transport systems. For example, if usage is predictably very variable for everybody in specific periods, such as supplying shops for the end-of-year shopping season, there is no way around it other than building massive overcapacity to handle peak load, or accepting congestion. For electricity grids there is a big difference between sources of firm capacity and the dispatchable generation used to balance variable non-dispatchable generation, such as wind farms, or to handle variable demand.

Self-hosted servers need extra capacity to cover for future demand. That means paying for hardware that stays idle waiting for usage spikes, while cloud providers are free to re-provision those resources elsewhere.

Only if aggregate demand is much larger than individual demand, and individual demands are uncorrelated. In practice virtual infrastructure providers need to have significant spare dispatchable capacity too.
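The standard statistical reason behind the condition that demands be uncorrelated can be stated briefly. If each of N customers has demand with standard deviation σ, and the provider keeps spare capacity for k standard deviations of the aggregate demand (k being an illustrative safety factor, my assumption), then the spare capacity needed is

$$
\text{spare}_{\text{uncorrelated}} = k\,\sigma\sqrt{N},
\qquad
\text{spare}_{\text{fully correlated}} = k\,\sigma N
$$

because the variance of a sum of N independent demands is Nσ², while for perfectly correlated demands the standard deviations add up. Per customer, the uncorrelated case needs only kσ/√N of spare capacity, which shrinks as N grows; the fully correlated case needs kσ per customer regardless of N, no better than each customer provisioning for its own peaks.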

Satisfying demand in the cloud is easy: allocate new instances automatically and pay the bill at the end of the month.

Easy does not mean cheap, and if the cloud provider needs to have significant dispatchable capacity it will not be cheap.

So the "spike to average" ratio is an important metric to evaluate when making the decision between the cloud and colocation.

It is not just the "spike to average" ratio, it is also whether that ratio is correlated or uncorrelated across cloud users. For example the "spike to average" ratio of my house heating demand is huge between winter and summer for some reason, but I suspect that for the same reason most people within several hundred kilometers of me will have the same "spike to average" ratio at the same time.
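A small Rust sketch shows why correlation matters more than each user's own ratio. The two demand profiles are made up for illustration: each user has the same individual spike-to-average ratio, but the ratio of their combined demand depends entirely on whether their peaks coincide.

```rust
/// Peak demand divided by average demand over a profile.
fn spike_to_average(profile: &[f64]) -> f64 {
    let peak = profile.iter().copied().fold(f64::MIN, f64::max);
    let avg = profile.iter().sum::<f64>() / profile.len() as f64;
    peak / avg
}

/// Element-wise sum of two demand profiles over the same periods.
fn aggregate(a: &[f64], b: &[f64]) -> Vec<f64> {
    a.iter().zip(b).map(|(x, y)| x + y).collect()
}

fn main() {
    // Hypothetical demand over four periods.
    let early_peak = [5.0, 1.0, 1.0, 1.0];
    let late_peak = [1.0, 1.0, 1.0, 5.0];
    // Each user alone: peak 5, average 2, ratio 2.5.
    println!("individual: {}", spike_to_average(&early_peak));
    // Peaks at the same time (correlated, like winter heating): still 2.5.
    println!("correlated: {}", spike_to_average(&aggregate(&late_peak, &late_peak)));
    // Peaks at different times: the aggregate ratio drops to 1.5.
    println!("offset: {}", spike_to_average(&aggregate(&early_peak, &late_peak)));
}
```

Pooling only reduces the capacity that must be kept for peaks in the last case; when everybody peaks together, the shared infrastructure has to be as oversized as each user's own would be.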

Note: electricity grids could be built that bridge regions on opposite longitudes to balance day and night demand, or on opposite latitudes to balance winter and summer demand, but that might be really expensive. A worldwide computer grid can be and has been built, at a cost, but latency issues prevent full capacity balancing.

Colocation prices finally overshoot cloud prices after adding extra capacity and staff costs. In closing, Dyachuk identified the crossover point where colocation becomes cheaper at around $100,000 per month, or 150 Amazon m4.2xlarge instances, ...

That is a pretty low point, but the comparison with m4.2xlarge instances is ridiculously unfair to the cloud case: a big reason to create a virtual cloud infrastructure is that medium-size servers are somewhat more cost-efficient than small servers, so buying a few large physical servers to run many smaller virtual servers may be cost-effective, if many small servers are what the application workload runs best on. But a cloud infrastructure is already virtualized, so it makes sense to rent small instance sizes, not instances the same size as the physical servers one would otherwise buy.

He also added that the figure will change based on the workload; Amazon is more attractive with more CPU and less I/O. Inversely, I/O-heavy deployments can be a problem on Amazon; disk and network bandwidth are much more expensive in the cloud. For example, bandwidth can sometimes be more than triple what you can easily find in a data center.

Storage in the cloud is usually very expensive indeed, and while networking can be expensive, it is usually expensive only for traffic outside the cloud infrastructure, for example when web frontends are cloud-hosted and storage backends are elsewhere. This is influenced by the cost of virtualization, and by the anisotropy of storage capacity.

So in the end cloud-rented systems can be cost-effective when these conditions apply:

rootkit.

As a rule I think that all those conditions are rarely met for any significant (100 and over) set of systems, unless quick setup or turning capital into operating expenditure really matters. The major case in which the technical conditions are met is a public-content distribution network, but large ones as a rule use not third-party virtualized infrastructures, but lots of physical hardware colocated around the world.

He also emphasized that the graph stops at 500 instances; beyond that lies another "wall" of investment due to networking constraints. At around the equivalent of 2000-3000 Amazon instances, networking becomes a significant bottleneck and demands larger investments in networking equipment to upgrade internal bandwidth, which may make Amazon affordable again.

High-bandwidth networking is falling in price, and large cloud providers don't have significant economies with respect to midsize or large organizations.

But more importantly, at a scale equivalent to 2000-3000 Amazon instances the infrastructure is so large that having an in-house data center is pretty much always the most cost-effective solution, because of flexibility and because it is well beyond the scale at which make-versus-buy resolves in favour of buying, even if the costs and complexities of maintaining a vast software-defined infrastructure are likely to be bigger than that difference.

An organization was reported as having:

2,000 servers from Amazon Web Services (AWS), Amazon.com’s cloud arm, and paid more than $10 million in 2016, double its 2015 outlay.

That $10 million per year can pay for a very nice set of data centres, quite a lot of hardware, quite a few colocated servers too, and use of a third-party public-content distribution network; getting to 2,000 servers in the cloud instead could only be due to the short-term ease, for each project in the organization, of just adding an extra line item to its operating expense budget, which for each project was not particularly noticeable but across all projects built up to a big number, and indeed the same source said that it was:

the largest ungoverned item on the company’s budget... meaning no one had to approve the cloud expenses.
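For scale, the reported figures work out per server as follows (simple division, assuming the $10 million covered all 2,000 servers for the whole year):

$$
\frac{\$10{,}000{,}000}{2{,}000\ \text{servers}} = \$5{,}000\ \text{per server-year},
\qquad
\frac{\$5{,}000}{8{,}760\ \text{h}} \approx \$0.57\ \text{per server-hour}
$$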

So yesterday I summarized with some examples and analysis the weird way in which the Rust programming language allows function name overloading, and added some notes on infix notation.

I have in the meantime found that, almost at the same time, another blogger has written a post about overloading in Rust. The post is somewhat interesting, even if the terminology and some of the concepts are quite confused, starting with the distinction between functions, their names, and methods. However, it is quite interesting in how it abuses the trait system: it is possible to create a dummy type like struct O; (with no fields), and then write this (much simplified from the original blogger's version):

struct O;

trait FOverloading<T, U> {
    fn f(t: T, u: U);
}

impl FOverloading<i32, f32> for O {
    fn f(t: i32, u: f32) { println!("t {:?} u {:?}", t, u); }
}

impl FOverloading<bool, char> for O {
    fn f(t: bool, u: char) { println!("t {:?} u {:?}", t, u); }
}

fn main() {
    O::f(1i32, 2.0f32);
    O::f(false, 'c');
}

Update 180313: To be more explicit, the following is not accepted; the introduction of two traits, or of a pointlessly generic trait, is essential, even if the two definitions of f are identical to those above, which are accepted. Another way to say this is that impl of bare functions is not allowed, only indirectly via a struct or a trait, and multiple impl of functions with the same name can happen only via impl of a trait.

// Rejected by the compiler: duplicate definitions of `f` in the same impl
impl O {
    fn f(t: i32, u: f32) { println!("t {:?} u {:?}", t, u); }
    fn f(t: bool, u: char) { println!("t {:?} u {:?}", t, u); }
}

The obvious point made in my preceding blog post is that if f can be overloaded using two templated traits for O, then O and the trait FOverloading are redundant palaver.

To explore infix function call notation, what is called method invocation in Rust terminology, I have written some simple examples that are also fairly weird:

use std::ops::Add;

struct S1 { i: i32 }
struct S2 { f: f32 }

fn addto_a(v: &mut i32, n: i32) -> () { *v = (*v) + n; }

/* Fails because non-trait function names cannot be overloaded */
// fn addto_a(v: &mut f32, n: f32) -> () { *v = (*v) + n; }

/* Fails because 'self' can only be used in associated functions, not free ones */
// fn addto_b(self: &mut i32, n: i32) -> () { *self = (*self) + n; }
// fn addto_c(n: i32, self: &mut i32) -> () { *self = (*self) + n; }

fn addto_g<T>(v: &mut T, n: T) -> ()
where
    T: Add<Output = T> + Copy,
{
    *v = (*v) + n;
    ()
}

trait Incr<T>
where
    T: Add,
{
    fn incrby_a(v: &mut Self, n: T) -> ();
    fn incrby_b(self: &mut Self, n: T) -> ();
    /* Fails because only the first argument *can* be named 'self' */
    // fn incrby_c(n: T, self: &mut Self) -> ();
}

impl Incr<i32> for S1 {
    fn incrby_a(v: &mut Self, n: i32) { v.i += n; }
    fn incrby_b(self: &mut Self, n: i32) { self.i += n; }
    // fn incrby_c(n: i32, self: &mut Self) { self.i += n; }
}

impl Incr<f32> for S2 {
    fn incrby_a(v: &mut Self, n: f32) { v.f += n; }
    fn incrby_b(self: &mut Self, n: f32) { self.f += n; }
    // fn incrby_c(n: f32, self: &mut Self) { self.f += n; }
}

fn main() {
    let mut s1 = S1 { i: 1i32 };
    let mut s2 = S2 { f: 1.0f32 };

    addto_a(&mut s1.i, 1i32);
    // addto_a(&mut s2.f, 1.0f32);

    addto_g(&mut s1.i, 1i32);
    addto_g(&mut s2.f, 1.0f32);

    Incr::incrby_a(&mut s1, 1i32);
    Incr::incrby_a(&mut s2, 1.0f32);
    /* Fails because for infix calls the first argument *must* be named 'self' */
    // s1.incrby_a(1i32);
    // s2.incrby_a(1.0f32);

    Incr::incrby_b(&mut s1, 1i32);
    Incr::incrby_b(&mut s2, 1.0f32);
    s1.incrby_b(1i32);
    s2.incrby_b(1.0f32);

    // s1.incrby_c(1i32);
    // s2.incrby_c(1.0f32);
}

It looks to me that the Rust designers created some needless distinctions and restrictions:

addto_a and functions associated with types via traits, like incrby_a and incrby_b):

note: `addto_a` must be defined only once in the value namespace of this module, which suggests that somehow the Rust designers regard function names associated with different traits to be in different namespaces.

addto_g.

self can only be used in functions associated with traits or types, even if they can be used in prefix syntax just like self-standing functions, as in both Incr::incrby_b(&mut s1,1i32); and s1.incrby_b(1i32);.

These restrictions and limitations are pretty much pointless and confusing. If there is a deliberate intent, it seems to be that the recommended style is:

A commenter points out that requiring overloading and genericity in templates to be via traits constrains the programmer to think ahead about the relationships between function names and types:

There is at least one downside: it cannot be an afterthought.

In C++, ad-hoc overloading allows you to overload a function over which you have no control (think 3rd party), whereas in Rust this is not actually doable.

That being said, ad-hoc overloading is mostly useful in C++ because of ad-hoc templates, which is the only place where you cannot know in advance which function the call will ultimately resolve to. In Rust, since templates are bound by traits, the fact that overloading cannot be an afterthought is not an issue since only the traits functions can be called anyway.

Conceivably that could be an upside, but the designers of Rust don't seem to have made an explicit case for this restriction.
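What Rust does allow as an afterthought is overloading a name of one's own over types one does not control, by implementing a local trait for them (usually called an extension trait; the orphan rule only forbids implementing a foreign trait for a foreign type). The trait Describe and its method are hypothetical; the sketch shows std's i32 and &str gaining an overloaded describe without any change to the standard library, whereas adding a new overload to an existing third-party function name remains impossible.

```rust
/// A local trait: we own the name `describe`, so we may implement it
/// for types we don't own, such as `i32` and `&str` from std.
trait Describe {
    fn describe(&self) -> String;
}

impl Describe for i32 {
    fn describe(&self) -> String {
        format!("integer {}", self)
    }
}

impl Describe for &str {
    fn describe(&self) -> String {
        format!("string {:?}", self)
    }
}

fn main() {
    // Infix and prefix forms both resolve on the argument type.
    println!("{}", 42i32.describe()); // integer 42
    println!("{}", Describe::describe(&"hello")); // string "hello"
}
```

The integer literal needs its i32 suffix: calling a trait method on an unsuffixed literal is rejected as ambiguous, one more small case where Rust overloading cannot be left entirely to context.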

Genericity, whether static (what is called "templates" by some) or dynamic (very incorrectly called "dynamic typing" by many), arises from overloading, where the same function name (sometimes, appallingly, called the "message") can resolve depending on context to many different function implementations (sometimes, appallingly, called the "method").

After a brief online discussion about overloading in the Rust programming language I have prepared a small example of how overloading and genericity are handled in it, which is a bit weird:

struct S1 { i: i32 }
struct S2 { f: f32 }

fn incr_s1(v: &mut S1) { v.i += 1i32; }
fn incr_s2(v: &mut S2) { v.f += 1.0f32; }

trait Incr { fn incr(self: &mut Self) -> (); }

impl Incr for S1 { fn incr(self: &mut Self) { self.i += 1i32; } }
impl Incr for S2 { fn incr(self: &mut Self) { self.f += 1.0f32; } }

fn incr<T>(v: &mut T)
where
    T: Incr,
{
    Incr::incr(v);
}

fn main() {
    let mut s1 = S1 { i: 1i32 };
    let mut s2 = S2 { f: 1.0f32 };

    /* Cannot overload 'incr' directly */
    incr_s1(&mut s1);
    incr_s2(&mut s2);

    /* But 'Incr::incr' can be overloaded */
    Incr::incr(&mut s1);
    Incr::incr(&mut s2);

    /* And so is consequently '.incr' */
    s1.incr();
    s2.incr();

    /* But can overload 'incr' via explicit genericity */
    incr(&mut s1);
    incr(&mut s2);
}

In the above, the functions incr_s1 and incr_s2 cannot have the same name, so direct overloading is not possible. However, by defining a suitable trait Incr with the function signature incr in it, overloading becomes possible if the function name is qualified by the trait name as Incr::incr, fairly needlessly, unless the function name is used in an infix position as .incr, in which case the trait name is not (always) necessary.

Note: in the case of the infix notation .incr, that is possible only if the first parameter is explicitly called self; also, only the first parameter can be called self. Which means that really the rule should be that infix call notation is equivalent to prefix call notation, regardless of the name of the first parameter.

But it gets better: by defining a template function that expands to calls of Incr::incr, it is possible to overload the plain name incr itself.

This palaver is entirely unnecessary in the general case. However, there is a weak case for relating overloaded function names to the function bodies that implement them:

signatures as well as names to implementations, which may be a useful restriction.

The latter point about signatures perplexes me most: without thinking too hard about it, it seems quite pointless, because it can be undone, as in the example above, by creating suitable template functions, and it just leads to unnecessary verbosity.

But the general impression is that the designers of Rust just assumed that overloading is derived from genericity, when in the general case it is the other way round.

It just occurred to me that there is a way to summarize many of my previous arguments about app stores and virtualization and containerization: disposable computing.

In effect the model adopted for consumer-level computing by Apple, Google/Android, and MS-Windows is that computing products, whether hardware or software, are disposable "black boxes": no user-serviceable parts inside, whether it is replaceable battery packs or updates to the Android system on a tablet, or abandonware applications under MS-Windows.

When the product becomes too unreliable or stops working, instead of fixing it, the user throws it away and buys a new one. That was the model pioneered by the computer games industry for both PC games and console games, and gamification is now prevalent: in effect, when consumers buy a cellphone or a tablet or even a PC, they are buying a "use-and-throw-away" game, and iOS or Android or MS-Windows 10 and their core applications are currently games that consumers play.

Disposable products have conquered consumer computing, and have been taking over enterprise computing too: the appeal is based on doing away with maintenance costs, and the ease of disposing of old applications and systems with a click on the web page of a cloud provider account.

The difficulty is that for both consumers and enterprises the disposable computing model only really works for a while, because the requirements of both people and businesses actually last much longer than disposable product lifetimes:

Sure, the data can go to the cloud, or one can just move to a cloud application, but that just shifts the long-term maintenance burden rather than eliminating it, and there is considerable churn also among cloud storage and application providers. Also, moving data and retraining from one cloud provider to another can be very, very hard and expensive, as most providers try to discourage that to achieve lock-in.

If it were easy to just throw away old messy stuff and buy new pristine stuff, then major well-managed IT organizations would not still be buying very expensive Windows 2003 extended service packs...

Six years ago I bought a 256GB Crucial M4 flash SSD for my desktop, which ended up in my laptop as my main storage unit for that period. Six years is a long time, and just in case I replaced it with a new Toshiba Q300 Pro of the same capacity. It has pretty much the same speed (limited by the SATA2 interface) and characteristics. I put the M4 in my older laptop that I keep as a spare, and it is still working perfectly: it has had no problems, no bad sectors, and has only used up 6% of its endurance:

    leaf# smartctl -A /dev/sda
    smartctl 6.2 2013-07-26 r3841 [x86_64-linux-4.4.0-116-generic] (local build)
    Copyright (C) 2002-13, Bruce Allen, Christian Franke, www.smartmontools.org

    === START OF READ SMART DATA SECTION ===
    SMART Attributes Data Structure revision number: 16
    Vendor Specific SMART Attributes with Thresholds:
    ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
      1 Raw_Read_Error_Rate     0x002f   100   100   050    Pre-fail  Always       -       0
      5 Reallocated_Sector_Ct   0x0033   100   100   010    Pre-fail  Always       -       0
      9 Power_On_Hours          0x0032   100   100   001    Old_age   Always       -       48774
     12 Power_Cycle_Count       0x0032   100   100   001    Old_age   Always       -       1212
    170 Grown_Failing_Block_Ct  0x0033   100   100   010    Pre-fail  Always       -       0
    171 Program_Fail_Count      0x0032   100   100   001    Old_age   Always       -       0
    172 Erase_Fail_Count        0x0032   100   100   001    Old_age   Always       -       130
    173 Wear_Leveling_Count     0x0033   094   094   010    Pre-fail  Always       -       180
    174 Unexpect_Power_Loss_Ct  0x0032   100   100   001    Old_age   Always       -       167
    181 Non4k_Aligned_Access    0x0022   100   100   001    Old_age   Always       -       109 26 82
    183 SATA_Iface_Downshift    0x0032   100   100   001    Old_age   Always       -       0
    184 End-to-End_Error        0x0033   100   100   050    Pre-fail  Always       -       0
    187 Reported_Uncorrect      0x0032   100   100   001    Old_age   Always       -       0
    188 Command_Timeout         0x0032   100   100   001    Old_age   Always       -       0
    189 Factory_Bad_Block_Ct    0x000e   100   100   001    Old_age   Always       -       81
    194 Temperature_Celsius     0x0022   100   100   000    Old_age   Always       -       0
    195 Hardware_ECC_Recovered  0x003a   100   100   001    Old_age   Always       -       0
    196 Reallocated_Event_Count 0x0032   100   100   001    Old_age   Always       -       0
    197 Current_Pending_Sector  0x0032   100   100   001    Old_age   Always       -       0
    198 Offline_Uncorrectable   0x0030   100   100   001    Old_age   Offline      -       0
    199 UDMA_CRC_Error_Count    0x0032   100   100   001    Old_age   Always       -       0
    202 Perc_Rated_Life_Used    0x0018   094   094   001    Old_age   Offline      -       6
    206 Write_Error_Rate        0x000e   100   100   001    Old_age   Always       -       0

I find it quite impressive still. Actually the Q300 Pro has a couple of small advantages: the FSTRIM operation seems to have much lower latency, and it has a temperature sensor. It also costs 1/4 of what the M4 cost 6 years ago, but even for flash SSDs the cost decrease curve has flattened in recent years.
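As a small illustration of reading such output, here is a minimal Python sketch that parses attribute rows in the layout shown above and derives the powered-on time and the endurance used. It assumes the column order printed by this particular smartctl version for this drive; the function names are hypothetical, and attribute names such as `Perc_Rated_Life_Used` are vendor-specific, so other drives may not report them at all.

```python
# Minimal sketch: parse attribute rows from `smartctl -A` output, assuming the
# column layout shown above (ID# ATTRIBUTE_NAME FLAG VALUE WORST THRESH TYPE
# UPDATED WHEN_FAILED RAW_VALUE). Other drives/versions may differ.


def parse_smart_attributes(text):
    """Return {attribute_name: (normalized_value, raw_value_string)}."""
    attrs = {}
    for line in text.splitlines():
        fields = line.split()
        # Attribute rows start with a numeric ID and have at least 10 columns;
        # the header row starts with "ID#" and is skipped.
        if len(fields) >= 10 and fields[0].isdigit():
            name = fields[1]
            value = int(fields[3])          # normalized VALUE column, e.g. "094"
            raw = " ".join(fields[9:])      # RAW_VALUE may contain spaces
            attrs[name] = (value, raw)
    return attrs


def wear_summary(attrs):
    """Years powered on and percentage of rated endurance used (vendor attr)."""
    hours = int(attrs["Power_On_Hours"][1])
    life_used = int(attrs["Perc_Rated_Life_Used"][1])
    return hours / (365.25 * 24), life_used


SAMPLE = """\
ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
  9 Power_On_Hours          0x0032   100   100   001    Old_age   Always       -       48774
173 Wear_Leveling_Count     0x0033   094   094   010    Pre-fail  Always       -       180
181 Non4k_Aligned_Access    0x0022   100   100   001    Old_age   Always       -       109 26 82
202 Perc_Rated_Life_Used    0x0018   094   094   001    Old_age   Offline      -       6
"""

if __name__ == "__main__":
    years, used = wear_summary(parse_smart_attributes(SAMPLE))
    print(f"{years:.1f} years powered on, {used}% of rated life used")
```

On the sample rows this reports about 5.6 years powered on and 6% of rated life used, consistent with the normalized VALUE of 094 for attribute 202.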

Part of the reason I switched is not only caution about a very critical component getting old (even if I do a lot of backups), but also that my spare old laptop still had a (pretty good) rotating disk, and doing package upgrades on it is very slow, as they require (for not very good reasons) a lot of small random IOPS. With a flash SSD my occasional upgrades of its root filetree are way faster. There is also the great advantage that flash SSDs are very resistant to mechanical issues like falling inside a dropped laptop, so I really want to use disk drives only for backup and inside desktops for mostly-cold data like my media and software collections.

After releasing a 32GB Optane cache module, Intel have finally released an Optane SSD line, the DC P4800X, as an NVMe card and 2.5in drive, in capacities of 375GB, 750GB and 1500GB, with prices around £3 per GB (VAT included) compared to around £0.5 per GB for similar NVMe flash SSDs.

Intel have published some tests as a Ceph storage unit but they are a bit opaque as the Optane devices are only used as DB/WAL caches/buffers together with 4TB flash SSDs.

On pages 11 and 12 however it is interesting to see that faster CPU chips deliver significantly better IO rates, which means that these solid state devices are in part limited by memory-bus capacity.

On pages 20 and 21 it is also interesting to see that Intel are preparing to deliver Optane RAM modules, as long anticipated, and confirmation that latencies for Optane are very much better than those for flash SSDs, at around 10µs versus 90µs for a 4KiB read.

There are more details in some third party tests (1, 2, 3) and the (somewhat inconsistent) results are:

Note: some results indicate writes are rather slower than reads, which is what I would expect given the profile of XPoint memory, so perhaps the tests that give higher write speeds than expected are for uncommitted writes, or else the P4800X has some really clever or huge write buffering.

The numbers are for devices of equivalent capacity, but given the much lower cost of flash SSDs, larger and faster flash SSDs can be used for the same money, and these might have significantly higher sequential rates.

Overall my impression is that, very approximately speaking, on throughput Optane gives a 2-3 times improvement over flash SSDs for a 6 times higher cost, while the improvement in latency is larger than the increase in cost.
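The arithmetic behind that impression is simple enough to sketch in Python, using only the approximate figures quoted above (£3/GB versus £0.5/GB, about 10µs versus 90µs per 4KiB read, a 2-3 times throughput gain). The 375GB capacity is just the smallest P4800X size, and the ratios are rough back-of-envelope values, not measured results.

```python
# Rough cost/benefit ratios for Optane versus flash NVMe SSDs, using only the
# approximate figures quoted in the text (not measurements).

OPTANE_GBP_PER_GB = 3.0   # about £3/GB, VAT included
FLASH_GBP_PER_GB = 0.5    # about £0.5/GB for similar NVMe flash SSDs
OPTANE_READ_US = 10.0     # about 10µs for a 4KiB read
FLASH_READ_US = 90.0      # about 90µs for a 4KiB read


def compare(capacity_gb, throughput_gain):
    """Device prices and 'improvement per unit of extra cost' ratios."""
    cost_ratio = OPTANE_GBP_PER_GB / FLASH_GBP_PER_GB   # 6x
    latency_gain = FLASH_READ_US / OPTANE_READ_US       # 9x
    return {
        "optane_gbp": capacity_gb * OPTANE_GBP_PER_GB,
        "flash_gbp": capacity_gb * FLASH_GBP_PER_GB,
        "cost_ratio": cost_ratio,
        # Below 1.0: the gain is smaller than the price increase.
        "throughput_gain_per_cost": throughput_gain / cost_ratio,
        # Above 1.0: the gain is larger than the price increase.
        "latency_gain_per_cost": latency_gain / cost_ratio,
    }


if __name__ == "__main__":
    for gain in (2.0, 3.0):
        print(gain, compare(375, gain))
```

For a 375GB device this gives about £1125 versus £187.5, a throughput gain per unit of cost between 0.33 and 0.5, and a latency gain per unit of cost of 1.5.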

There has been some recent debate (1, 2) about how distributions should package unmaintainable applications:

It's now typical for hundreds of small libraries to be used by any application, often pegged to specific versions. Language-specific tools manage all the resulting complexity automatically, but distributions can't muster the manpower to package a fraction of this stuff.

The second sentence is quite wrong: those "language-specific tools" don't manage that complexity at all, they simply enable it. All those APIs and dependencies are not managed in any way: an application that uses all those hundreds of randomly installed components works right just by coincidence, as far as the language-specific tools go. Those language-specific tools are not much better than git clone or the old make install at managing complexity.
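To make the point concrete, here is a toy Python model of what such tools do with pinned dependencies: compute the transitive closure and fetch it. All package names and versions are hypothetical, and real resolvers differ in whether they install conflicting versions side by side or pick one; in neither case does fetching the closure check that the combination was ever tested together.

```python
from collections import defaultdict

# Hypothetical packages: each "name@version" pins exact versions of its
# direct dependencies.
DEPS = {
    "app@1.0": ["web@2.1", "orm@0.9"],
    "web@2.1": ["http@1.4", "log@3.0"],
    "orm@0.9": ["db@5.2", "log@2.7"],
    "http@1.4": ["log@3.0"],
    "db@5.2": [],
    "log@3.0": [],
    "log@2.7": [],
}


def closure(root):
    """Every name@version reachable from root: what actually gets installed."""
    seen, stack = set(), [root]
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(DEPS.get(node, []))
    return seen


def version_conflicts(nodes):
    """Names pulled in at more than one version; merely fetching them says
    nothing about whether the combination works."""
    versions = defaultdict(set)
    for node in nodes:
        name, version = node.split("@")
        versions[name].add(version)
    return {n: sorted(v) for n, v in versions.items() if len(v) > 1}


if __name__ == "__main__":
    installed = closure("app@1.0")
    print(len(installed), "packages")       # 7 with this toy graph
    print(version_conflicts(installed))     # {'log': ['2.7', '3.0']}
```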

Also no developer is forced to write applications that have hundreds of dependencies, nor vast collections of tiny libraries: applications and libraries can be deliberately designed for cohesiveness and maintainability. Choosing the granularity of a software unit is an important design choice, and developers are free to choose a larger, more convenient granularity over a smaller one.

I can only speak about the Haskell community. While there are some exceptions, it generally is not interested in Debian containing Haskell packages, and indeed system-wide installations of Haskell packages can be an active problem for development. This is despite Debian having done a much better job at packaging a lot of Haskell libraries than it has at say, npm libraries. Debian still only packages one version of anything, and there is lag and complex process involved, and so friction with the Haskell community.

On the other hand, there is a distribution that the Haskell community broadly does like, and that's Nix. A subset of the Haskell community uses Nix to manage and deploy Haskell software, and there's generally a good impression of it. Nix seems to be doing something right, that Debian is not doing.

This is far from surprising: the packaging model of Nix is the old depot model, which is basically the same one used by most language-specific tools, and which crucially enables a "whatever" approach to software configuration management: having many different silos of different collections of randomly assembled components that for the time being seem to work together.
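A toy Python model of the depot idea: every package version gets its own directory, and each application has its own profile choosing versions, so silos with different and untested combinations coexist without anything forcing them to agree. The `/depot` paths and package names are hypothetical; Nix itself uses hash-prefixed directories under `/nix/store` rather than plain name-version directories.

```python
from pathlib import PurePosixPath

DEPOT = PurePosixPath("/depot")   # hypothetical depot root


def depot_path(name, version):
    """Each package version is installed once, in its own directory."""
    return DEPOT / f"{name}-{version}"


def make_profile(selections):
    """An application's silo: library name -> the depot directory it uses."""
    return {name: depot_path(name, ver) for name, ver in selections.items()}


def depot_contents(*profiles):
    """Everything the depot must keep: the union of all the silos."""
    return sorted({str(path) for prof in profiles for path in prof.values()})


if __name__ == "__main__":
    app_a = make_profile({"log": "3.0", "http": "1.4"})
    app_b = make_profile({"log": "2.7", "db": "5.2"})
    # Both silos coexist; nothing reconciles their choice of "log".
    print(app_a["log"], app_b["log"])
    print(depot_contents(app_a, app_b))
```

The design choice being illustrated is that conflicts are never resolved, only isolated: the depot grows with every distinct combination that some application happens to use.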

things like Docker, that also radically rethink the distribution model. We could easily end up with some nightmare of lithification, as described by Robert "r0ml" Lefkowitz in his Linux.conf.au talk. Endlessly copied and compacted layers of code, contained or in the cloud. Programmer-archeologists right out of a Vinge SF novel.

Well, yes, but the entire business case of Docker is in practice lithification (1, 2): making application environments into black boxes that are maintained by the developers of the application, that is, in practice, soon by nobody.

Note: lithification is often achieved by system emulation/virtualization, and decades ago D. Knuth already wrote in The Art of Computer Programming that "entirely too much programmers' time has been spent in writing such simulators and entirely too much computer time has been wasted in using them".

In ancient times lithification had a jargon name: the "dusty deck", that is an application written as a pack of punched cards, consolidating lots of modules in one pack, that usually gathered dust and would be occasionally re-run when needed.

A current trend in software development is to use programming languages, often interpreted high level languages, combined with heavy use of third-party libraries, and a language-specific package manager for installing libraries for the developer to use, and sometimes also for the sysadmin installing the software for production to use. This bypasses the Linux distributions entirely. The benefit is that it has allowed ecosystems for specific programming languages where there is very little friction for using libraries written in that language to be used by developers, speeding up development cycles a lot.

That seems to me a complete misdescription, because doing language-specific package management is by itself no different from doing distribution-specific package management: the rationale for effective package management is always the same.

My understanding is that things now happen more like this: I'm developing an application. I realise I could use a library. I run the language-specific package manager (pip, cpan, gem, npm, cargo, etc), it downloads the library, installs it in my home directory or my application source tree, and in less than the time it takes to have sip of tea, I can get back to developing my application.

This has a lot less friction than the Debian route. The attraction to application programmers is clear. For library authors, the process is also much streamlined. Writing a library, especially in a high-level language, is fairly easy, and publishing it for others to use is quick and simple.

But the very same advantages pertained to MS-Visual Basic programmers under MS-Windows 95 over 20 years ago, and earlier, because they are not the advantages of using a "language-specific package manager" that "downloads the library, installs it in my home directory", but of some important simplifications:

Simply avoiding all build, portability and maintainability issues does result in a lot less development friction (1, 2), and the famous book The Mythical Man-Month already reported several decades ago that simply developing an application in ideal circumstances is easy, but:

Finally, promotion of a program to a programming product requires its thorough documentation, so that anyone may use it, fix it, and extend it. As a rule of thumb, I estimate that a programming product costs at least three times as much as a debugged program with the same function.

Moving across the vertical boundary, a program becomes a component in a programming system. This is a collection of interacting programs, coordinated in function and disciplined in format, so that the assemblage constitutes an entire facility for large tasks. To become a programming system component, a program must be written so that every input and output conforms in syntax and semantics with precisely defined interfaces. The program must also be designed so that it uses only a prescribed budget of resources — memory space, input-output devices, computer time.

Finally, the program must be tested with other system components, in all expected combinations. This testing must be extensive, for the number of cases grows combinatorially. It is time-consuming, for subtle bugs arise from unexpected interactions of debugged components.

A programming system component costs at least three times as much as a stand-alone program of the same function. The cost may be greater if the system has many components.

The avoidance of most system engineering costs has always been the attraction of "workspace" development environments, like LISP machines (1, 2, 3), or the legendary Smalltalk-80, or even the amazing APL\360 (1, 2), or, across the decades, GNU EMACS and EMACS LISP: developing single-language prototype applications in optimal and limited conditions is really much easier than engineering applications that are products.

Note: it is little known in proper IT culture, but there is a huge subculture in the business world of developing, selling, or just circulating huge MS-Excel spreadsheets with incredibly complicated and often absurd internal logic. This is enabled by MS-Excel being in effect a single language prototyping environment too.

Cheaply developing single-language unengineered prototypes can be a smart business decision, especially for startups, where demonstrating a prototype is more important than delivering a product, at least initially.

The problem for systems engineers with that approach, whether it was a smart business choice or it "just happened", is that inevitably some such applications become critical and later turn into abandonware, and engineering in the form of portability and maintainability has to be retrofitted to them, or they have to be "frozen".

The "freezing" of unmaintainable legacy prototypes is an ancient issue: there have been reports of such applications for IBM 1401 systems running in a frozen environment inside an IBM/360 emulator, itself running inside an emulator for a later mainframe.

I wish I had spent less time unraveling bizarre issues with "whatever" applications written "frictionlessly" for R, Python, Matlab, Mathematica. But the temptation for developers (and their managers) to take development shortcuts and then tell systems engineers to "deal with it" is very large, also because it dumps a large part of the IT budget of an organization on the system engineering team. I suspect that containerization, where "whatever" applications are isolated within environments that are then pushed back to developers, is a reaction to such dumping.

Going back to GNU/Linux and other distributions, the story with them is that they are by necessity not just single interpreted language workspaces, but collections of very diverse system components, and a single distribution package may contain code in several languages, customized for several different system types, to be compiled for several different architectures. Traditional packaging is a necessity for that.

I have mentioned before that the economic case for virtualization rests in large part on 4U servers being cheaper per unit of capacity than smaller servers (but not by much), and that infrastructure consolidation beyond that does not offer much in terms of economies of scale or scope.

Perhaps that needs explaining a bit more, so I'll use the classic example of economies of scale: to make car body parts the best method is a giant and expensive press that can shape an ingot of steel into a body part, like a door, in one massive hammer action. Anything smaller and cheaper than that press requires a lot more time and work; but conversely anything bigger does not give that much of a gain. Another classic example is a power station: large turbines, whether steam or water powered, have significantly better cost/power ratios than smaller ones, in part I suspect because the central area is a smaller proportion of the total inlet area.

In computing (or in finance or in retail) such effects don't happen beyond a low scale: sure, 4U servers are rather cheaper than desktops per unit of computing capacity delivered, and (not much) larger cooling and power supply systems are more efficient than pretty small ones, but these gains taper off pretty soon. Wal*Mart and Amazon in retail have similar profiles: their industrial costs (warehousing, distribution, delivery) are not significantly lower than their smaller competitors, they simply accept smaller margins on a bigger volume of sales.

Are there economies of scope in computer infrastructures?

Perhaps if they are very highly uniform: software installation on 10,000 nearly identical computers is rather parallelizable and thus costs not much more in terms of manpower than on 1,000, but very highly uniform infrastructures only work well with "embarrassingly parallel" workloads, like content delivery networks, and even so very highly uniform infrastructures often don't stay such for long. There is also the problem that heterogeneous workloads don't work well on homogeneous systems.

However in some corporate sectors I am familiar with there is a drive to consolidate several heterogeneous workloads on unified homogeneous infrastructures via techniques like "containers" and virtualization, even if there is no obvious demonstration that this delivers economies of scale or scope. In part this is because of corporate fads, which are an important influence on corporate careers, but I think that there is another important motivation: cutting costs by cutting service levels, and obfuscating the cut in service levels with talk of economies of scale or scope.

Consider the case where 6 different IT infrastructures, each with 200 servers and 8 system engineers, are consolidated into a single one with 800 servers running 1,200 virtual machines or containers and 36 system engineers.

In that case there are likely zero economies of scale or scope: service levels (system responsiveness, system administrator attention) have simply been cut by 1/4 to 1/3 or more. This is because a 200-server infrastructure with 8 system engineers is probably already beyond the level at which most economies of scale or scope have been realized.

Such a change is not a case of doing more with less, but of doing less with less, and more than linearly so, because while the hardware has been cut by 1/3 and the staff by 1/4, the actual workload of 1,200 systems has not been cut.
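The load figures in this example can be worked out exactly with a few lines of Python. This sketch uses the numbers given above and adds one assumption for illustration: that before consolidation the 1,200 systems ran one per physical server.

```python
from fractions import Fraction

# Figures from the example in the text; the "before" case assumes (for
# illustration only) one system per physical server.
WORKLOAD = 1200                              # systems, unchanged by consolidation
SERVERS_BEFORE, STAFF_BEFORE = 6 * 200, 6 * 8
SERVERS_AFTER, STAFF_AFTER = 800, 36


def load(servers, staff):
    """Systems per server and systems per system engineer."""
    return Fraction(WORKLOAD, servers), Fraction(WORKLOAD, staff)


hardware_cut = 1 - Fraction(SERVERS_AFTER, SERVERS_BEFORE)   # fraction removed
staff_cut = 1 - Fraction(STAFF_AFTER, STAFF_BEFORE)          # fraction removed

if __name__ == "__main__":
    for label, (per_server, per_engineer) in (
        ("before", load(SERVERS_BEFORE, STAFF_BEFORE)),
        ("after", load(SERVERS_AFTER, STAFF_AFTER)),
    ):
        print(label, float(per_server), float(per_engineer))
    print("hardware cut", hardware_cut, "staff cut", staff_cut)
```

Each server ends up carrying 1.5 times the systems, and each engineer goes from 25 to about 33 systems; the hardware is cut by 1/3 and the staff by 1/4 for an unchanged workload.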

The inevitable result is that costs will fall, but also service levels will fall, and with reduced hardware and staff for the same workload the only way to cope will be to cut maintenance and development more than proportionately, which is usually achieved by freezing existing infrastructures in place, as every change becomes more risky and harder to do, that is doing rather less with less.

Doing rather less with less is often a perfectly sensible thing to do from a business point of view, when one has to make do with fewer resources, doing rather less does not have a fatal effect on the business, and it can be reversed later. Obfuscating the switch to doing rather less with less with an apparent change of strategy can also be useful from a business point of view, as appearances matter. But sometimes I have a feeling that it is not a deliberate choice.

Consolidation can be positive at a fairly low scale, where there can be economies of scale and scope: consider 1,000 diverse servers and 50 system engineers, divided into 25 sets with 40 servers and 2 system engineers each: that might be highly inconvenient as:

There might be a case to consolidate a bit, into 5 groups each with 200 servers and 10 (or 8 perhaps) system engineers each (so that coverage becomes a lot easier), but I think that it would be hard to demonstrate economies of scale or scope beyond that.

I have mentioned previously the additional cost and higher complexity of both public and private virtualized infrastructures: they arise in large part from a single issue, that the mapping between dependencies of virtual systems and hardware systems is arbitrary, and indeed it must be arbitrary to enable most of the expected cost savings of treating a generic hardware infrastructure as an entirely flexible substrate.

Since the mapping is arbitrary, resilience by redundancy becomes unreliable within a single cloud: some related virtual instances might well be hosted by the same hardware system, or by some hardware systems attached to the same switch, etc.
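To give a sense of how unreliable redundancy becomes with arbitrary placement, here is a Python sketch of the chance that replicas placed independently and uniformly at random share a host or a switch (the birthday problem). Uniform independent placement is a simplifying assumption, as are the hypothetical 400 hosts on 10 switches; real schedulers may place instances quite differently.

```python
def p_shared(units, replicas):
    """Probability that at least two of `replicas` instances, each placed
    independently and uniformly at random on one of `units` hosts (or
    switches), end up on the same one."""
    p_all_distinct = 1.0
    for i in range(replicas):
        p_all_distinct *= max(units - i, 0) / units
    return 1.0 - p_all_distinct


if __name__ == "__main__":
    hosts, switches = 400, 10     # hypothetical: 40 hosts per switch
    print("same host:  ", p_shared(hosts, 3))      # about 0.0075
    print("same switch:", p_shared(switches, 3))   # about 0.28
```

So with three replicas sharing a host is unlikely, but there is roughly a 28% chance that two of them depend on the same switch.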

It is possible to build virtualized infrastructures that expose hardware dependencies to the administrators of the virtual layer and allow them to place virtual infrastructure onto specific parts of the hardware infrastructure, but if the placements are dynamic this soon becomes unmaintainably complex as the hardware infrastructure changes, and if the placements are static it removes most of the advantages of virtualized infrastructures, as the hardware layer can no longer be treated as an amorphous pool of resources.

Therefore most virtual infrastructures choose a third, coarser approach: availability zones (as they are called in AWS), which are in practice entirely separate and independent virtual infrastructures, so that virtual systems can be placed in different zones for redundancy.

In theory this means that services within a zone can arbitrarily fail, and only inter-zone replication gives a guarantee of continuity. This leads to extremely complex service structures in which all layers are replicated and load-balanced across zones, including the load balancers themselves.

To some extent this is not entirely necessary: several public virtual infrastructures give availability guarantees within a zone, for example at most 4 hours a year of outage or 99.95% availability, but these are per virtual system not per zone, so if a virtual system depends on several other virtual systems in the same zone, it can happen that they fail at different times. Also it is expensive: for a given availability target the hardware infrastructure must contain a lot of redundancy everywhere, as its virtual guests can indeed be anywhere on it.
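The per-system nature of those guarantees matters because a service that needs several virtual systems up at once multiplies their availabilities. A minimal Python sketch, assuming independent failures (so that outages fall at different times, as described above) and a hypothetical chain of 5 dependent systems, each at the 99.95% figure:

```python
HOURS_PER_YEAR = 365.25 * 24


def downtime_hours(availability):
    """Expected hours of outage per year for a given availability."""
    return (1.0 - availability) * HOURS_PER_YEAR


def chain_availability(per_system, count):
    """Availability of a service that needs all `count` systems up at once,
    assuming their failures are independent."""
    return per_system ** count


if __name__ == "__main__":
    print(downtime_hours(0.9995))                          # about 4.4 hours
    print(downtime_hours(chain_availability(0.9995, 5)))   # about 21.9 hours
```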

So availability zones are a symptom of the large cost of consolidating hardware systems into a generic virtual infrastructure substrate: such infrastructures both have to be generically redundant within themselves, and yet still need wholesale replication to provide redundancy to services.

Running services on physical systems instead allows case-by-case assessment of which hardware and software components need to be replicated, based on their individual requirements, probabilities of failure, and times to recovery, and in my experience with a little thinking not much complexity is required to achieve good levels of availability for most services. So virtualized infrastructures seem to be best suited to two cases, that often overlap:

But the first case can be easily handled without virtualization, and the second is very rare and expensive in its complexity, yet consolidation into virtualized infrastructures turns most application setups into that second case.

In one case I saw, a significant service used by thousands of users daily was split onto three pretty much unrelated systems for workload balancing and for ease of maintenance, and each system was designed for resilience without much redundancy, except for the database backend. The result was a service that was very highly available, at an amazingly low cost in both hardware and maintenance, and maintenance was made that much easier by its much simpler configuration than had it been "continuously available". Since it was acceptable for recovery from incidents to take an hour or two, or even half a day in most cases, running a partial service on a subset of the systems was quite acceptable, and it happened very rarely.
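A rough way to see why that design worked: with independent systems, a total outage needs all of them down at once, while a partial outage needs only some. A Python sketch, where the per-system availability of 99.9% is a purely hypothetical figure and independence of failures is an assumption:

```python
def outage_profile(n, availability):
    """Return (P(full service), P(partial service), P(total outage)) for a
    service spread over n independent systems, each up with the given
    probability, where any surviving system still gives partial service."""
    p_full = availability ** n
    p_none = (1.0 - availability) ** n
    return p_full, 1.0 - p_full - p_none, p_none


if __name__ == "__main__":
    hours = 365.25 * 24
    full, partial, none = outage_profile(3, 0.999)
    print(f"partial service: {partial * hours:.1f} h/year")
    print(f"total outage:    {none * hours * 3600:.3f} s/year")
```

Under these assumptions partial service amounts to about a day per year in total, while a total outage becomes negligible.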

We also ignore that there are as a rule quite small or no cost benefits from increasing scale by consolidation, either at the datacentre level, or at the hardware procurement and installation level, and businesses like Google that claim savings at scale achieve them by highly specializing their hardware and datacentres to run highly specialized applications. Other companies like Amazon, despite the inconvenience of having to deal with redundancy at the level of availability zones, have been charging considerably more than the cost of running a private non-virtualized infrastructure.

As with storage, large amorphous pools of resources don't work well, primarily because of huge virtualization overhead and the mismatch between homogeneous hardware and heterogeneous workloads, which are highly anisotropic. I suspect that their popularity rests mainly on a fashion for centralization (in the form of consolidation) regardless of circumstances, and on the initial impression that they work well when they are still not fully loaded and configured, that is when they are most expensive.

The virtualized infrastructures offered by third party vendors have the same issues, only bigger, but I suspect that they are popular because they allow clever CFOs to turn a class of capital expenses into current expenses, much the same as leasing does, and that for them this is sometimes worth paying a higher price.

A blog post by Canonical reports the opinions of the Skype developers on distributing their binary package in the Snap (1, 2) containerized format (descended in part from Click) sponsored by Canonical, which is similar to the Docker format (but I reckon Snap is in some ways preferable), with arguments that look familiar:

The benefit of snaps means we don’t need to test on so many platforms – we can maintain one package and make sure that works across most of the distros. It was that one factor which was the driver for us to invest in building a snap.

In addition, automatic updates are a plus. Skype has update repos for .deb and rpm which means previously we were reliant on our users to update manually. Skype now ships updates almost every week and nearly instant updates are important for us. We are keen to ensure our users are running the latest and greatest Skype.

Many people adopt snaps for the security benefits too. We need to access files and devices to allow Skype to work as intended so the sandboxing in confinement mode would restrict this.

These are just the well known "app store" properties for systems like Android: each application in effect comes with its own mini-distribution, often built using something equivalent to make install, and that mini-distribution is fully defined and managed and updated by the developer, as long as the developer cares to do so.

For users, especially individual users rather than organizations, it looks like a great deal: they hand over the bother of system administration to the developers of the applications that they use (very similarly to virtualized infrastructures).

Handing over sysadmin control to developers often has inconvenient consequences, as developers tend to underestimate sysadm efforts, or simply don't budget for them, as if the additional burden of system administration were free. This is summarized in an article referring to an ACM publication:

In ACMQueue magazine, Bridget Kromhout writes about containers and why they are not the solution to every problem.

Development teams love the idea of shipping their dependencies bundled with their apps, imagining limitless portability. Someone in security is weeping for the unpatched CVEs, but feature velocity is so desirable that security's pleas go unheard.

Platform operators are happy (well, less surly) knowing they can upgrade the underlying infrastructure without affecting the dependencies for any applications, until they realize the heavyweight app containers shipping a full operating system aren't being maintained at all.

Those issues affect organizations more than individual users: sooner or later many systems accrete black-box containers that are no longer maintained, just like legacy applications running on dedicated hardware, and eventually, if some sysadms are left, they are told to sort that out, retroactively, in the worst possible conditions.

VLAN tags effectively just turn broadcasts into multicasts, including the initial flooding to discover a neighbor. That is not too bad, but they are also used to classify hosts into subsets that have almost no internal connectivity, which is the opposite of what is desirable.

Apart from the added complexity, that means that very little traffic happens within a VLAN, and almost all traffic is among VLANs.

Unless of course the servers for the clients on a VLAN are on the same VLAN, which means that most servers will be on most VLANs.
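A toy Python model makes the point: with clients grouped by VLAN and servers on their own VLAN, almost every flow crosses VLANs (and so must be routed), unless the servers are attached to every client VLAN. Host names, VLAN names and flows below are all hypothetical.

```python
# Hypothetical VLAN memberships: clients grouped by department, servers apart.
VLANS = {
    "pc1": {"sales"}, "pc2": {"sales"},
    "pc3": {"eng"}, "pc4": {"eng"},
    "mail": {"servers"}, "files": {"servers"},
}
# Hypothetical traffic: mostly clients talking to servers.
FLOWS = [("pc1", "mail"), ("pc2", "files"), ("pc3", "mail"),
         ("pc4", "files"), ("pc1", "pc2")]


def intra_vlan_fraction(vlans, flows):
    """Fraction of flows whose two endpoints share at least one VLAN."""
    same = sum(1 for a, b in flows if vlans[a] & vlans[b])
    return same / len(flows)


def servers_on_all_client_vlans(vlans, servers):
    """Copy of `vlans` where each server is also attached to every client VLAN."""
    client_vlans = set().union(*(v for h, v in vlans.items() if h not in servers))
    return {h: (v | client_vlans if h in servers else set(v))
            for h, v in vlans.items()}


if __name__ == "__main__":
    print(intra_vlan_fraction(VLANS, FLOWS))                  # 0.2
    multi = servers_on_all_client_vlans(VLANS, {"mail", "files"})
    print(intra_vlan_fraction(multi, FLOWS))                  # 1.0
    print(sorted(multi["mail"]))
```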

I was recently asked about having been "hacker'ed", that is whether some hacker had compromised some system I was associated with, and my answer was "perhaps a long time ago". Indeed I had to think about it a bit more and try to remember.

Before giving some details, the usual premise: this is not necessarily because of superhuman security of the systems that I use; it is mostly because they don't hold valuable data or have other reasons to be valuable (for example I keep well clear of Bitcoin (Don't own cryptocurrencies, Kidnappers free blockchain expert Pavel Lerner after receiving $US1 million ransom in bitcoin) and of online finance and trading), and therefore hackers won't invest big budgets to get into them. Diligent and careful practice and attention to detail then mean that low-budget attempts are foiled, because low-budget attacks target low-hanging fruit (the systems of people who are not diligent and careful, of which there are plenty).

So the only major case I can remember was around 15 years ago, when a web server was compromised by taking advantage of a bug in a web framework used by the developer of an application running on the server. This allowed the hackers to host some malware on the server. While I was not that developer (but honestly I might well have been: I would not have done a full security audit of every framework or library I used either), I was disappointed that it took a while to figure out this had happened, because the hacker had taken care to keep the site looking the same, with only some links pointing to the malware.

There have been minor cases: for example, even longer ago, a MS-Windows 2000 system was compromised using a zero-day bug while I was installing it at someone else's home (just bad luck, and I guess in part because downloading the security updates after installation took so long), but this had no appreciable effect because I realized pretty soon during that installation that it had been hacked (for use as a spam bot). There have also been a handful of cases where laptops or workstations of coworkers were hacked because they clicked on some malware link and then clicked Run, and either the antivirus had been disabled or the malware was too new.

In all cases the hacks seemed to originate from faraway developing countries, which is expected: it is too risky to hack systems in the same (or an allied) jurisdiction as the one where the hacker resides, and the Internet gives access to many resources across the world, which has provided many good business opportunities for developing countries, but also for less nice businesses.

As to "targeted operations", that is high-budget hackers from major domestic or allied security services or criminal organisations, I am not aware of any system I know having been targeted. Again this is because I think it has never been worth it, but also because high budgets allow the use of techniques, like compromised hardware, microscopic spycams or microphones to collect passwords, or firmware compromised before delivery, that are pretty much undetectable by a regular person without expensive equipment. If there is a minuscule spy chip inside my keyboard or motherboard or a power socket or a router I would not be able to find out.

My preference is for cable and equipment labeling and tidy storage, also because when there are issues that need solving that makes both troubleshooting and fixing issues easier and quicker. Having clear labels and tidy spare cables helps a lot under pressure. Therefore I have been using some simple techniques that I wish were more common:

Bundling cables with hook-and-loop (velcro) tapes. It is possible to buy strips, but I find it more convenient to buy a roll of tape and cut it to measure. It is usually much better to wrap with the hook side inside and the loop side outside, as the loop side is rather less likely to attach to something. Of course a lot of people use traditional nylon ties for that and they are cheaper, but velcro is cheap enough, reusable, and removable without damaging the cable. Also nylon cable ties are very inappropriate for fibre cables.

As to how to label I am aware of the standard practice in mechanical and electrical engineering to label both cables and sockets with an inventory number, and a central list of which cable to connect to which socket, but I don't like it for computer equipment because:

So my usual practice is to label both cables and sockets with matching labels, possibly with different labels on each end, and to make the labels descriptive, not just inventory numbers. For example, on the host side a network connection may have a label with host name and socket number, on both the socket and the cable end. On the switch side it may have host name, switch name and port number. This means that verifying that the right connections are in place is very easy. So is figuring out which cable plugs into which socket, which minimizes mistakes under pressure. The goals are to make clear what happens when a cable is disconnected, which cable to disconnect to get a given effect, and which cable needs to be reconnected.
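As a minimal sketch of this convention, the hypothetical shell function below prints the text for the two pairs of matching labels of one network cable. It assumes a single cable between a host socket and a switch port, with the socket and the cable end on the host side sharing one label, and the switch port and the cable end on the switch side sharing another. The function name and the label layout are illustrative assumptions.

```shell
# Hypothetical helper: print matching label texts for one network cable.
# Usage: cable_labels HOST SOCKET SWITCH PORT
cable_labels() {
    local host=$1 socket=$2 switch=$3 port=$4
    # Host side: the same text goes on the host socket and on that cable end.
    printf 'host end:   %s %s\n' "$host" "$socket"
    # Switch side: the same text goes on the switch port and on that cable end.
    printf 'switch end: %s %s:%s\n' "$host" "$switch" "$port"
}
```

For example, `cable_labels web1 eth0 sw1 17` (all example names) prints `host end:   web1 eth0` and `switch end: web1 sw1:17`. Both labels carry the host name, so either end of the cable identifies the connection.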

For me it is also important to minimize the specificity of connections. For example, I usually want to ensure that nearly all downstream ports on a switch are equivalent (on the same VLAN and subnet). Then they don't need to be tagged with a system name, and the switch end of a downstream cable does not need to be tagged with a specific switch port. So I usually put on a switch a label such as ports 2-42 subnet 192.168.1.56, and the host name and subnet on the switch end of downstream cables.

In order to have something like a central list of cable and socket connections, I photograph the socket areas with a high-resolution digital camera (with large pixels and a wide-angle lens), ensuring that most of the labels are legible in the photograph. This is not as complete as a cable and socket list, but it is usually a lot more accurate, because it is much easier to keep up-to-date.

Note: however, I do use explicit inventory numbers and a central list in some more critical and static cases, usually in addition to meaningful matching labels.

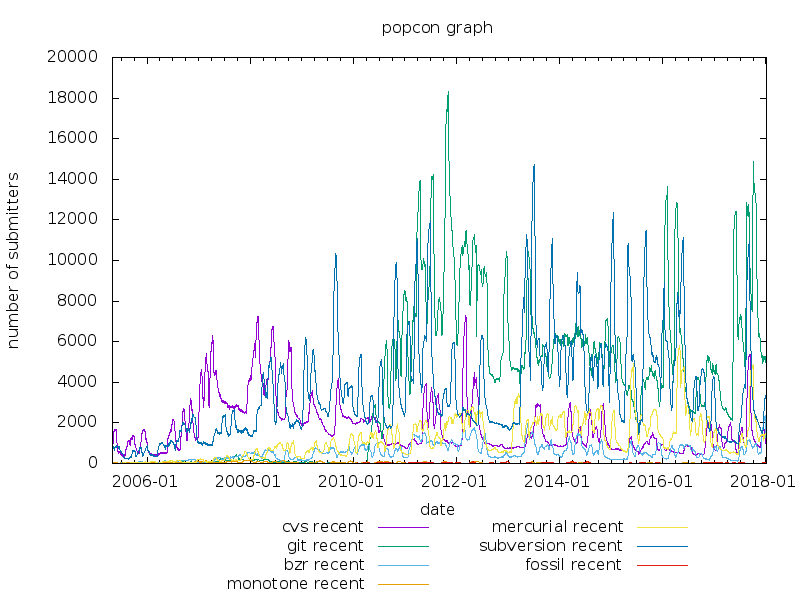

I had long had the intention to complete my draft (from 2011...) of a blog post on the four most popular distributed version control systems (monotone, Mercurial, Bazaar, git). Over the years, however, both monotone and Bazaar have lost popularity. Among distributed version control systems, only git and Mercurial are currently still widely used, with git being more popular than Mercurial; Subversion and even CVS are also still popular. This is at least according to Debian's popcon report:

That's sad, as both monotone and Bazaar have some very good aspects. There are a lot of contrasting opinions, and there is some criticism of both monotone and Bazaar. The main criticism is that they were much slower than git and Mercurial, especially for large projects, as their storage backends are somewhat slower than git's on typical disks. Secondarily, monotone is seen as too similar to git, and Bazaar as having some privileged workflows similar to Subversion.

Note: Bazaar, however, has been very competitive in speed since the switch to a git-like storage format in version 2.0 and some improvements in handling large directory trees (1, 2) in 2009. Monotone, while slower, happened to be in the spotlight as to speed at an early and particularly unfortunate time, and has improved a lot since.

However, it seems to me quite good that after a couple of years of stasis Bazaar development has resumed in its new Breezy variant. This is so even if the authors say that "Your open source projects should still be using Git", which is something I disagree with. There is a pretty good video presentation about the Breezy project on YouTube, and it makes the same point about using git, while ignoring Mercurial.

Note: the two years of stasis have demonstrated that Bazaar, whose development started over 10 years ago, is quite mature and usable. It does not need much maintenance beyond occasional bug fixes and conversion to Python 3, which is one of the main goals of the Breezy variant and is nearly complete.

The video presentation makes some interesting points, that at least partially contradict that repeated advice, and in my view they are largely based on the difference between the command front-end of a version control system and its back-end:

stage, the object store, tree, working directory, its commands are named without any reference as to what they manipulate. For example, git commit and git reset operate on the stage and the object store and are to some extent opposite operations. Similarly, git checkout and git add operate on the working directory and the stage, and are opposite operations too.
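A small shell sketch can make these relationships concrete. It assumes only that `git` is installed, and the function name `git_stage_demo` and the file `f.txt` are hypothetical. It works in a throwaway repository and shows `git add` copying a file from the working directory to the stage, and `git checkout -- FILE` copying it back. It also shows `git reset FILE` copying the last committed version from the object store back to the stage.

```shell
# Hypothetical demo of which git commands move file contents between
# the working directory, the stage (index) and the object store.
git_stage_demo() {
    local repo
    repo=$(mktemp -d) || return 1
    (
        cd "$repo" || exit 1
        git init -q .
        echo one > f.txt
        git add f.txt                     # working directory -> stage
        git -c user.name=demo -c user.email=demo@example.invalid \
            commit -q -m initial          # stage -> object store (new commit)
        echo two > f.txt
        git add f.txt                     # stage now holds "two"
        echo three > f.txt                # working directory now holds "three"
        git checkout -q -- f.txt          # stage -> working directory: back to "two"
        cat f.txt
        git reset -q f.txt                # object store (HEAD) -> stage: stage holds "one"
        git show :f.txt                   # print the staged version
    )
    rm -rf "$repo"
}
```

Running `git_stage_demo` should print `two` (the working copy restored from the stage) and then `one` (the stage restored from the last commit). None of the command names mentions which of the areas it reads from or writes to.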

While I like Mercurial otherwise, I think that having two storage files (revision log and revision index) for every file in the repository is a serious problem. Most software packages have very many small files, in part because archives are not used as much as they should be in UNIX and successors like Linux. Most storage systems cannot handle lots of small files very well, never mind tripling their number. While I like git, its rather awkward command interface and its inflexibility make it a hard tool to use without a GUI, and GUIs considerably limit its power.
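To check how many small files a repository's storage actually holds, a generic shell sketch like the following counts regular files below a size threshold. The function name and the 4096-byte default threshold are arbitrary assumptions. One would point it at a store directory such as `.hg/store` or `.git/objects`.

```shell
# Hypothetical helper: count regular files smaller than MAX_BYTES under DIR.
# Usage: count_small_files DIR [MAX_BYTES]   (default threshold: 4096 bytes)
count_small_files() {
    local dir=$1 max_bytes=${2:-4096}
    # With the 'c' suffix, -size -N matches files strictly smaller than N bytes.
    find "$dir" -type f -size -"${max_bytes}"c | wc -l | tr -d ' '
}
```

Comparing that count with the total number of files (`find DIR -type f | wc -l`) gives a rough idea of how much of the repository is made of small files. Small files are the kind of load that many storage systems handle poorly.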

Bazaar/Breezy can use either its native storage format or the git storage format. Both grow by commit, and both support repacking commit files into archives. Also, for old-style programmers it can at first be used in a fairly Subversion-style workflow.

Note: most developers seem superficially uninterested in the storage format of their version control system, but it has a significant impact on speed and maintainability. Storage formats with lots of small files can be very slow on disks, for backups, for storage maintenance, and for indexing. Other storage formats can have intrinsic issues. For example, monotone uses SQLite2, which as a relational DBMS tries hard to implement atomic transactions. Unfortunately, this means that an initial clone of a remote repository can generate either a lot of transactions or one huge transaction (the well-known issue of initial population of a database), which can be very slow.
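The effect of repacking can be seen with git's own storage format. There each new object is first written as one small "loose" file under `.git/objects`, and `git gc` later packs them into a single archive-like pack file. The following is a minimal sketch, assuming `git` is installed. The function names `loose_objects` and `pack_demo` are hypothetical, while `git count-objects` and `git gc` are standard git commands.

```shell
# Number of loose objects (one small file each) in the repository at $1.
# 'git count-objects' prints e.g. "15 objects, 60 kilobytes".
loose_objects() {
    git -C "$1" count-objects | cut -d' ' -f1
}

# Hypothetical demo: make a few commits, then pack the loose objects.
pack_demo() {
    local repo i
    repo=$(mktemp -d) || return 1
    git init -q "$repo"
    for i in 1 2 3 4 5; do
        echo "revision $i" > "$repo/file$i.txt"
        git -C "$repo" add "file$i.txt"
        git -C "$repo" -c user.name=demo -c user.email=demo@example.invalid \
            commit -q -m "commit $i"
    done
    echo "loose before gc: $(loose_objects "$repo")"
    git -C "$repo" gc -q                  # pack reachable loose objects into one pack file
    echo "loose after gc: $(loose_objects "$repo")"
    rm -rf "$repo"
}
```

Each commit here adds a blob, a tree and a commit object, each as a separate small file. After `git gc` the loose count drops to zero, because everything now lives in the pack.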

While doing a backup using pigz (instead of pbzip2) and aespipe, I noticed that the expected excellent parallelism happened very nicely. This was so even though the data was read mostly from a Btrfs filesystem on dm-crypt, that is, with full checksum verification and decryption during reads:

```
top - 14:46:56 up  2:05,  2 users,  load average: 9.23, 3.74, 1.88
Tasks: 493 total,   1 running, 492 sleeping,   0 stopped,   0 zombie
%Cpu0  : 80.5 us, 12.9 sy,  0.0 ni,  3.6 id,  2.3 wa,  0.0 hi,  0.7 si,  0.0 st
%Cpu1  : 75.4 us, 13.3 sy,  0.0 ni,  0.7 id,  7.0 wa,  0.0 hi,  3.7 si,  0.0 st
%Cpu2  : 82.9 us,  8.2 sy,  0.0 ni,  7.9 id,  1.0 wa,  0.0 hi,  0.0 si,  0.0 st
%Cpu3  : 81.7 us,  9.2 sy,  0.0 ni,  9.2 id,  0.0 wa,  0.0 hi,  0.0 si,  0.0 st
%Cpu4  : 86.8 us,  7.8 sy,  0.0 ni,  5.4 id,  0.0 wa,  0.0 hi,  0.0 si,  0.0 st
%Cpu5  : 87.6 us,  6.0 sy,  0.0 ni,  5.7 id,  0.7 wa,  0.0 hi,  0.0 si,  0.0 st
%Cpu6  : 85.5 us,  6.2 sy,  0.0 ni,  5.6 id,  2.6 wa,  0.0 hi,  0.0 si,  0.0 st
%Cpu7  : 89.1 us,  5.0 sy,  0.0 ni,  5.6 id,  0.3 wa,  0.0 hi,  0.0 si,  0.0 st
KiB Mem:   8071600 total,  7650684 used,   420916 free,       36 buffers
KiB Swap:        0 total,        0 used,        0 free.  6988408 cached Mem

  PID  PPID USER      PR  NI    VIRT    RES   DATA  %CPU %MEM     TIME+ TTY      COMMAND
 7636  7194 root      20   0  615972   8420 604436 608.6  0.1  11:53.45 pts/7    pigz -4
 7637  7194 root      20   0    4424    856    344  81.8  0.0   1:16.04 pts/7    aespipe -e aes -T
 7635  7194 root      20   0   35828  11524   8892  11.9  0.1   0:24.68 pts/7    tar -c -b 64 -f - --one-file-system +
```
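The pipeline in the process list above, with `tar` feeding `pigz -4` feeding `aespipe -e aes -T`, can be wrapped in a small shell sketch like the following. Several parts are assumptions: the function names, the `-C "$src" .` part of the `tar` command (which is truncated in the `top` output), and the destination file. The two filters are separate functions only so that they can be swapped out, for example for testing without a passphrase prompt.

```shell
# Filters taken from the process list above; redefine them to swap tools.
compress_filter() { pigz -4; }             # parallel gzip, level 4
encrypt_filter()  { aespipe -e aes -T; }   # AES encryption, asks for the passphrase twice

# Hypothetical wrapper: archive the tree at SRC into the encrypted file DEST.
# Usage: backup_tree SRC DEST
backup_tree() {
    local src=$1 dest=$2
    tar -c -b 64 -f - --one-file-system -C "$src" . \
        | compress_filter \
        | encrypt_filter > "$dest"
}
```

Each stage of the pipeline is a separate process, so `tar` reading and checksumming, `pigz` compressing on several CPUs, and `aespipe` encrypting all overlap. This overlap produces the per-process CPU figures shown. As written, the exit status is only that of the last stage; in `bash`, `set -o pipefail` would make a failure in `tar` or `pigz` visible too.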